The 3 Most Common Types of Facial Deepfakes Explained

GenAI, deepfakes, and digital injections. We hear these terms everywhere, but what exactly do they mean? Today, we’re taking a deep dive into how facial deepfake technology works.

Prior to the last 2-3 years, identity fraud relied mostly on physical spoofing techniques, such as printed photos, silicone masks, and videos shown on displays. Fast forward to 2025, and the threat landscape has evolved dramatically. Since the age of sophisticated generative AI models (such as StyleGAN [1] or Stable Diffusion [2]), more and more attacks are being committed through digital means.

Most commonly, genAI can be divided into textual, audio, and visual form. Each of these categories can be further split into generic and domain-specific generation. When it comes to identity verification and biometric authentication, the human face is a key identifier. As a result, fraudsters frequently exploit domain-specific facial generation techniques to try to bypass such systems. As we will see further, even this narrow domain is very rich and full of complexities. For a comprehensive study on generic generation, refer to [3], for example.

Generally speaking, facial generation can be divided into images and videos. Historically, image generation has always yielded better or more realistic results compared to videos, given that generating a single image is simpler than generating hundreds or thousands of frames that follow the laws of physics and natural human behavior patterns. The first AI-generated “Will Smith Eating Spaghetti” video, which went viral on Reddit in 2023, demonstrated this [4].

Deepfake technology falls on a wide spectrum, spanning synthetic faces to face swaps. Some deepfakes are generated in real time. Others are carefully pre-recorded and injected into verification pipelines. Regardless of the method, the deepfakes used in identity fraud all have one goal: to bypass security systems with a fake or stolen identity. Below we explain the three most common types of facial deepfake technology used to commit identity fraud.

Synthetic Faces, Face Swaps, and Face Animations

Synthetic Faces

This is probably the most common type of facial deepfake technology used today.

A synthetic face is an image or video generated by a generative model without the need of a target identity. In other words, it is a face that doesn’t necessarily exist, but looks realistic, given that it is based on the millions of images or videos of faces that the generative model was trained on.

There are two dominant types of models for generating synthetic faces: Generative Adversarial Networks (GANs) and Diffusion models. The most prominent example among GAN models is the StyleGAN 1, 2, 3 series. However, diffusion models are getting more attention recently due to their ability to make even more realistic images and videos.

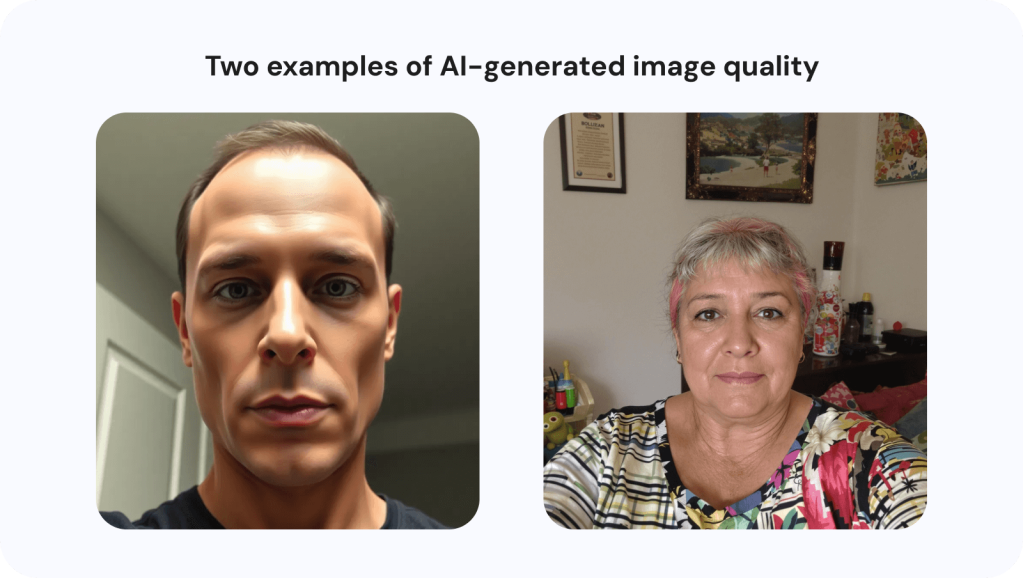

Ten years ago, GAN models created simplistic, semi-realistic faces with many artifacts. Five years ago, faces became photorealistic but suspiciously perfect: ideal lighting, smooth skin, no wrinkles or pimples. Today, genAI models (mostly diffusion) can generate faces that capture the roughness and imperfections of reality.

Besides research papers and github repositories, there are multiple online tools that provide synthetic face generation features for free. Of course, higher quality usually comes at a higher price, but nevertheless it is extremely cheap.

The image below on the left is generated by a simpler generative model of a previous generation. It is cartoonish and simplified. The image of the woman beside it is much more realistic, with wrinkles, a natural expression, and even a chaotic background that mirrors real life. Yet, it’s AI-generated (Flux AI [5]).

Synthetic video generation took a little longer to catch up with the realism and coherence of still image generation. The informal “Will Smith Eating Spaghetti” benchmark [6] is a clear example of these dynamics. In March 2023, the video was like a surreal dream, while the Veo 3 [7] generated video in April 2025 looks realistic and has no visible artifacts.

In the context of face liveness and identity verification, we face synthetic faces when fraudsters try to create fake identities. By having a completely new AI-generated person, they ensure that face recognition will not match it to an existing user.

Given the easiness of generating new images or videos, fraudsters can generate thousands of new identities in a single run and perform a large scale attack on an app.

💡

It is still common to hear that people can detect deepfakes by looking for artifacts. While this was true several years ago, and a hand with the wrong number of fingers or a wall clock in the background displaying the wrong digits could help identify a deepfake, nowadays it is not safe to rely just on our eyes. We require specialized tools to assist us with this task.

Face Swaps

Another common form of deepfake attack is the face swap. As opposed to synthetic faces, face swap methods for images and videos evolved in parallel. This is partly because, when a target video is available, it’s more feasible to generate a convincing result by overlaying a new identity onto the existing footage.

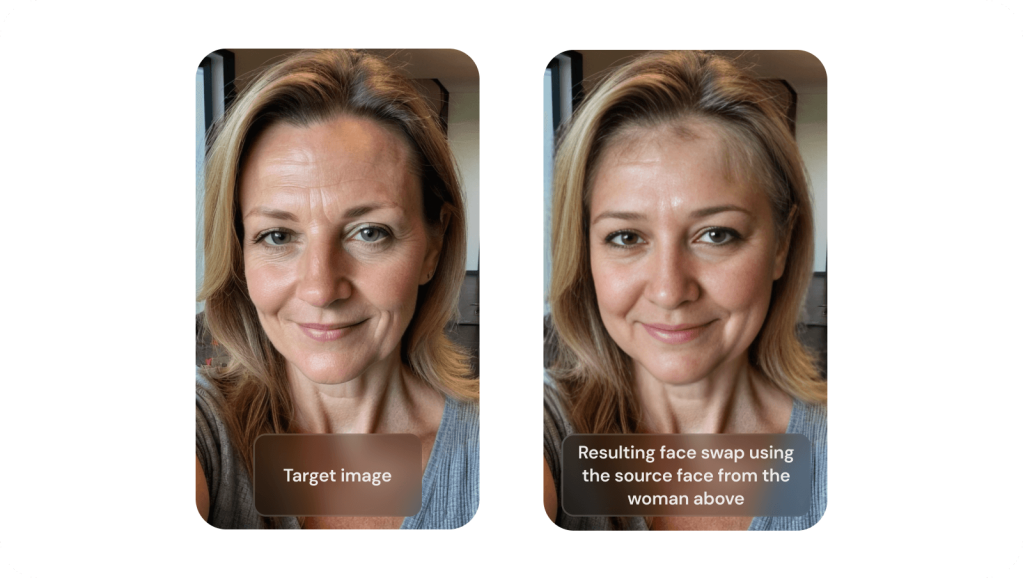

A face swap is an image or video that places the source identity’s face onto an image or video with another person. In other words, face swap techniques replace a face from one medium with a face from another. The target image or video provides the content and action, while the source image supplies the identity.

The very first face swap methods didn’t use any generative AI. Instead, an algorithm detects 68 facial landmarks [8] on both the source and target faces, aligns them, and overlays the source face onto the target image.

Of course, this method would produce artifacts, especially in cases of different skin tone or facial hair, however, it was available even 10-20 years ago. With time, these approaches were augmented with additional neural enhancement, and finally face swaps are also now created with generative models, just like synthetic faces.

Let’s imagine we want to use the identity of the synthetic woman above to generate a face swap. Using the freely available tool Vidnoz [9], we provide the target image (left image below), the source face (the woman’s image above), and the result is a realistic face swap (right image below), in which we can clearly identify the woman.

There are hundreds of online tools and open-source repositories available with image or video face swaps, that include a variety of features like resolution, swapping multiple faces, etc.

At Incode, we often observe face swap attacks in production. One of the clearest indicators of a face swap is the repetition of the same background, person, lighting, and other visual parameters across multiple videos or images, in which the only element that changes is the face and its identity. Usually, this technique is combined with ID fraud, such as using a stolen or fake ID card.

Face Animation

This type of deepfakes is increasingly being used by fraudsters.

Face animation, or face reenactment, is a video generated from a source image by adding motion or video effects. In other words, it makes the person in the selfie talk, smile, move, and blink, all while respecting the identity and the physical conditions of the original photo.

This attack is different from the previous two because it doesn’t use fake identities, nor does it swap identities. It brings still—sometimes AI-generated—images to life.

There are several major ways of generating a face animation, and they differ by what’s driving the animation

- another video of (another) person—the most common one, the easiest to generate. An example of animating Mona Lisa with this approach from 2019 [10]

Click here to accept marketing cookies and load the video.

- audio file of someone talking—based on this, a lip sync video will be generated

- text—similar to the above method; it first generates the audio file with text-to-speech and then proceeds as above

- prompt—this is the newest and most advanced animation method; it makes a video just based on a single prompt.

The technology behind these animation methods is similar to what we discussed earlier, typically using diffusion models or GANs. However, another common approach involves key-point-based geometry warping algorithms combined with custom CNN or Transformer architectures that generate pixel values. Despite the specific technical approach, today’s state-of-the-art models can create remarkably realistic animations.

Below is the woman from the synthetic image above that we animated with the free Vidnoz tool. The prompt that we used was “A woman waiving and happily smiling”. As we can see, the tool decided to skip the first part of the prompt. Also, the longer the video gets the harder is it for the tool to preserve the identity and not fall into generating a too simplified face. Realism, consistency, presence of artifacts—it all directly depends on the tool and overall it’s getting better very quickly.

At Incode, we have detected that these kinds of attacks are happening more and more often. Fraudsters take someone else’s photo and turn it into a video so that it looks like a legitimate selfie-taking process. They want to make sure that the person is blinking, moving slightly, changing expressions, etc, to make it seem natural.

Other deepfakes

Of course, all of these deepfake methods can be combined and used together. Nothing stops fraudsters from doing this. For example, they might carry out a face swap and then animate the image, or perhaps they create a synthetic video, and then swap the face with the source identity. It all depends on what their goal is.

The list of deepfakes that we have described above is far from exhaustive. There are also deepfakes for image enhancement, for changing someone’s age, and for combining video with audio generation. There are also so-called shallowfakes, which include easier image/video manipulations from a human perspective—manual editing, static virtual backgrounds, watermarks, etc.

On Human Performance

As we discussed above, deepfakes are getting more and more realistic, consistent, and physically plausible. 10 years ago, anyone could spot a deepfake image. Five years ago, anyone could spot a deepfake video. Two years ago, only professionally trained labelers could identify deepfakes to some extent. Today, we can confidently say that even the most experienced labelers cannot consistently detect a deepfake just by looking at it.

To back up this statement, we ran an internal deepfake detection experiment. We asked five out of the 50 best labelers in our team to label a dataset consisting of deepfake images (face swaps and synthetic faces) and live images of real people. We were curious to see what was the upper bound of human performance at deepfake detection.

The results surprised us at first—the average labeler was able to detect 98.4% of deepfakes! However, we later realized what had happened. The average labeler also rejected more than 15% of real selfies. In other words, they were trying to find artifacts even where there weren’t any, and were unable to distinguish between deepfake artifacts and artifacts from low-quality cameras/lighting or other factors. Of course, such numbers are unacceptable for any kind of product.

In addition to this, for humans it is really hard to distinguish between a synthetic face, a face swap, or face animation. Even if we can tell that we are looking at a deepfake, accurately classifying the type of deepfake poses a significant challenge.

Given these complexities, labelers can only claim something based on multiple signals from each session, including but not limited to the score of the deepfake detection model. On the other hand, given this limited performance by top professionals, it becomes clear that it is impossible to uniquely rely on our visual perception when trying to catch a deepfake.

How Incode Defends Against Deepfakes

Fraudsters use similar techniques to impersonate someone today as they did years ago when carrying out physical presentation attacks. However, they now turn to deepfakes with the hope of achieving greater success than with traditional methods like replays or 3D masks.

Incode’s advanced deepfake detection is designed for a world where AI attacks are the norm, not the exception. We preempt the attack as opposed to simply reacting to it. Our technology combines multi-modal liveness detection with risk signal analysis from the user’s device, camera, and behavior to deliver passive, invisible defenses that don’t compromise the user experience.

Deepfakes represent the most advanced evolution of identity fraud to date, combining AI-generated media, device manipulation, and impersonation into scalable attack pipelines. Organizations can no longer rely on outdated spoofing defenses or surface-level liveness checks.

Learn more about Incode’s deepfake detection and biometric identity verification.

Author

Efim Boieru is a leading expert in AI and computer vision with over ten years of experience. He has developed advanced machine learning solutions for major tech companies including Huawei, Bosch, and MIT. At Incode, he leads the development of face liveness and deepfake detection technologies.

References

- Karras, T., Laine, S., & Aila, T. (2019). A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 4401-4410). https://doi.org/10.48550/arXiv.1812.04948

- Rombach, R., Blattmann, A., Lorenz, D., Esser, P., & Ommer, B. (2022). High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 10674-10685). https://doi.org/10.48550/arXiv.2112.10752

- Gozalo-Brizuela, R., & Garrido-Merchan, E. E. (2024). A survey of generative AI applications. Journal of Computer Science, 20 (8), 801–818. https://doi.org/10.3844/jcssp.2024.801.818

- chaindrop. (2023, March 23). Will Smith eating spaghetti [Reddit post]. r/StableDiffusion. Retrieved from https://www.reddit.com/r/StableDiffusion/comments/1244h2c/will_smith_eating_spaghetti/

- FluxAI.art. (2025). Free AI face generator – Generate faces from text or photo. Retrieved July 29 2025, from https://fluxai.art/features/ai-face-generator

- Wikipedia contributors. (2025, July 1). Will Smith Eating Spaghetti test. In Wikipedia, The Free Encyclopedia. Retrieved July 29 2025 from https://en.wikipedia.org/wiki/Will_Smith_Eating_Spaghetti_test

- Nguyen, K. (2025, May 21). Expanding Vertex AI with the next wave of generative AI media models: Imagen 4, Veo 3, and Lyria 2. Google Cloud Blog. Retrieved from https://cloud.google.com/blog/products/ai-machine-learning/announcing-veo-3-imagen-4-and-lyria-2-on-vertex-ai

- Kazemi, V., & Sullivan, J. (2014). One millisecond face alignment with an ensemble of regression trees. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 1867-1874). https://openaccess.thecvf.com/content_cvpr_2014/papers/Kazemi_One_Millisecond_Face_2014_CVPR_paper.pdf

- Vidnoz. (2025). Free face swap online for realistic photo/video face swap; AI face animation app & online: Animate face from photo. Retrieved July 29 2025, from https://www.vidnoz.com/face-swap.html and https://www.vidnoz.com/ai-solutions/face-animator.html

- Zakharov, E. [Egor Zakharov]. (2019, May 21). Few-Shot Adversarial Learning of Realistic Neural Talking Head Models [Video]. YouTube. https://www.youtube.com/watch?v=p1b5aiTrGzY