Liveness Detection

Trust that only real, live people are verified with Incode’s advanced liveness detection technology.

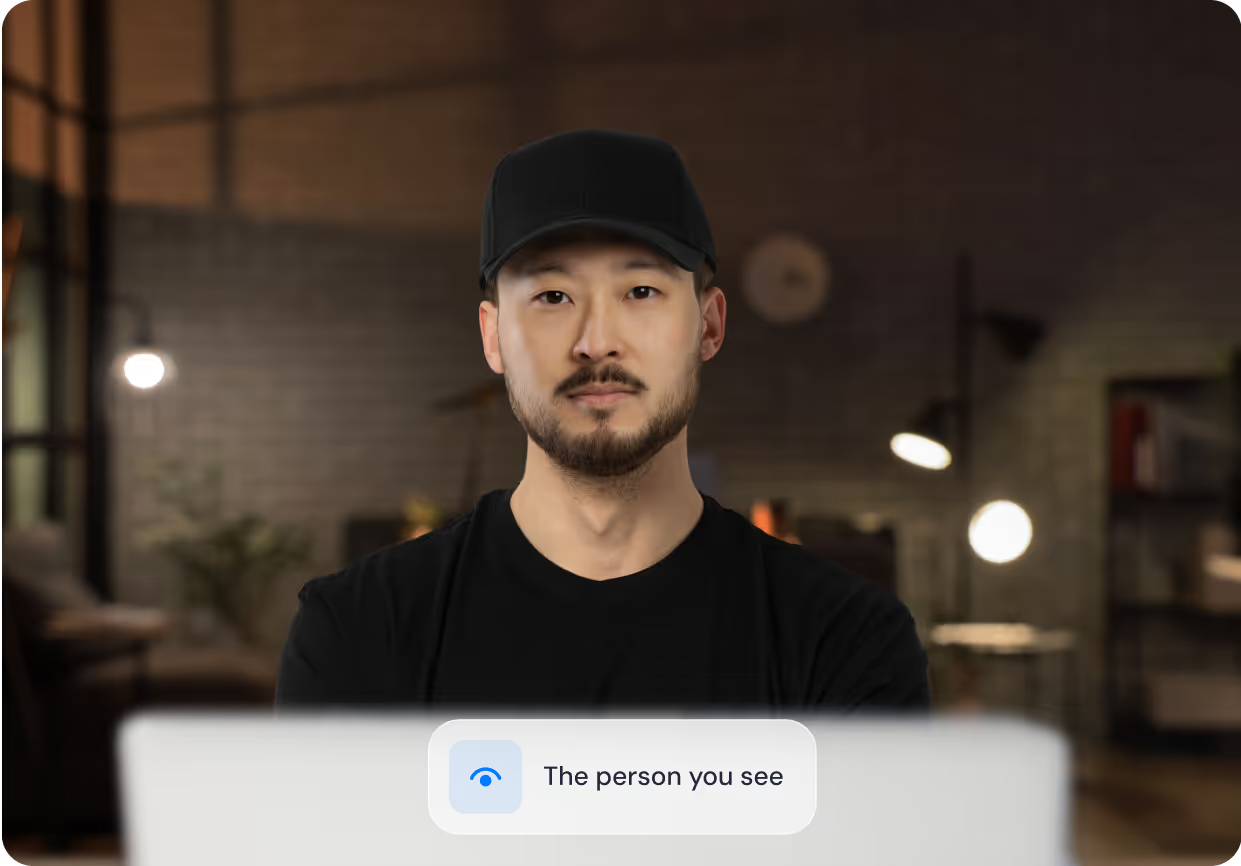

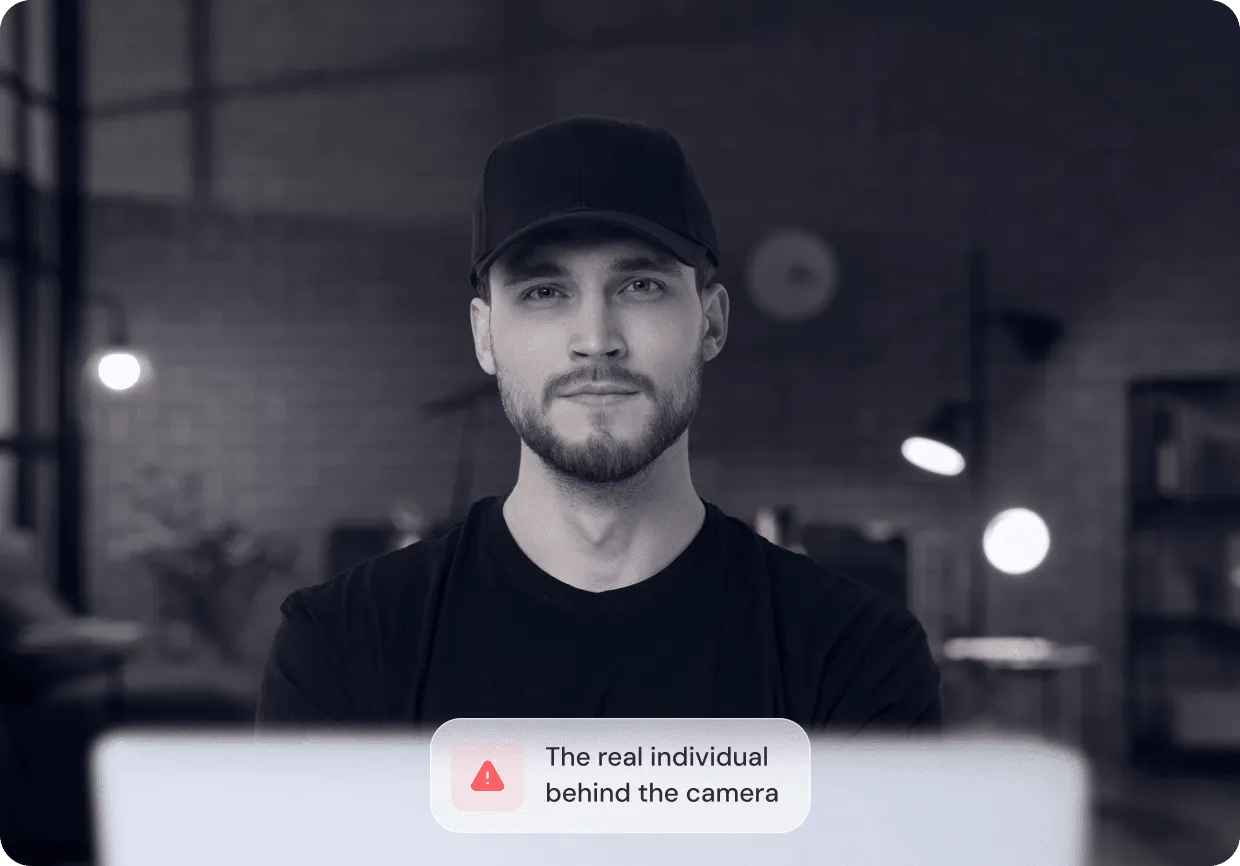

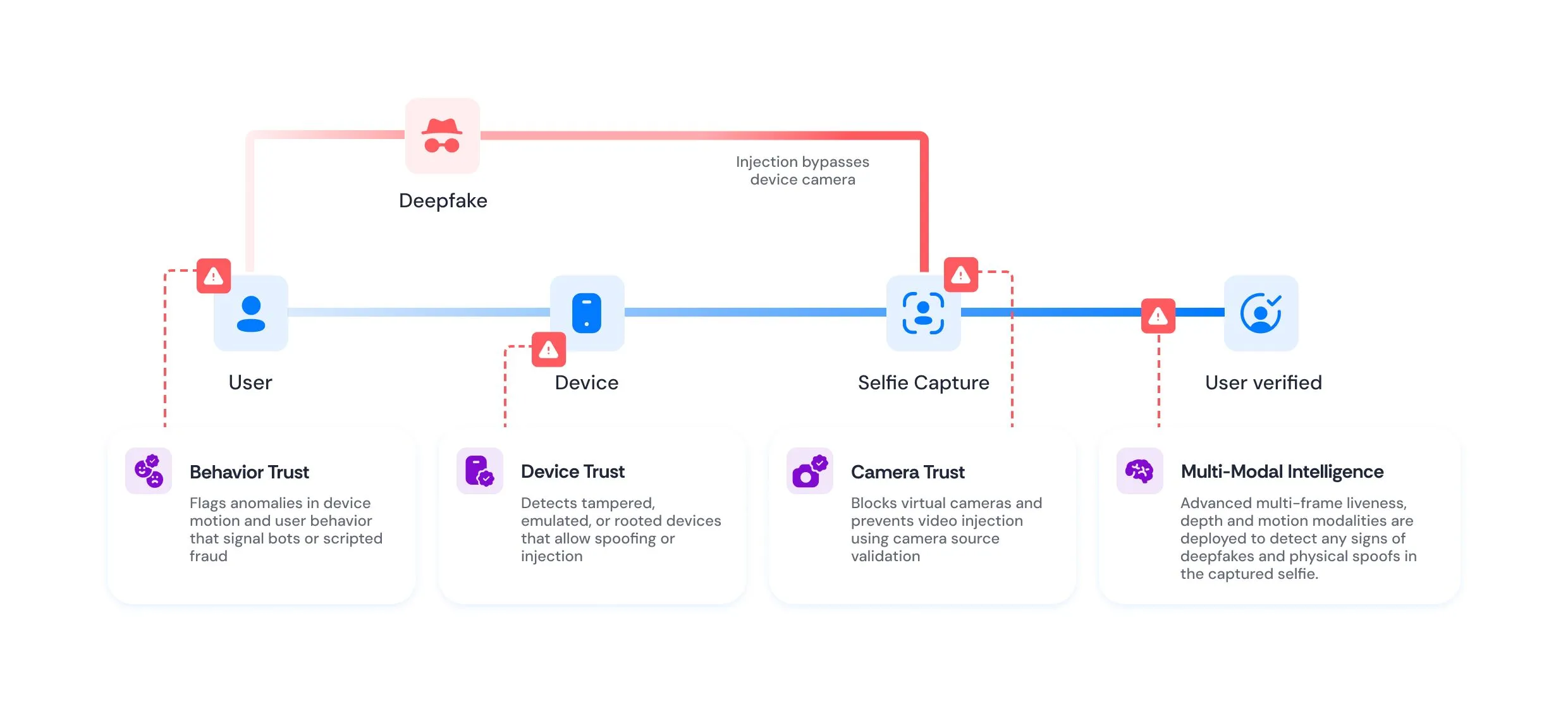

Generative AI is driving more sophisticated identity fraud and deepfakes, making it harder than ever to differentiate between legitimate users and fraudsters. The financial and reputational damage this can cause puts your business at serious risk. But with threats advancing at such an alarming rate, most identity verification approaches are unable to keep up.

Safeguard your business with instant liveness detection, starting today.

Fraudsters could be manipulating your systems to appear as someone else, or they might not be a live person at all.

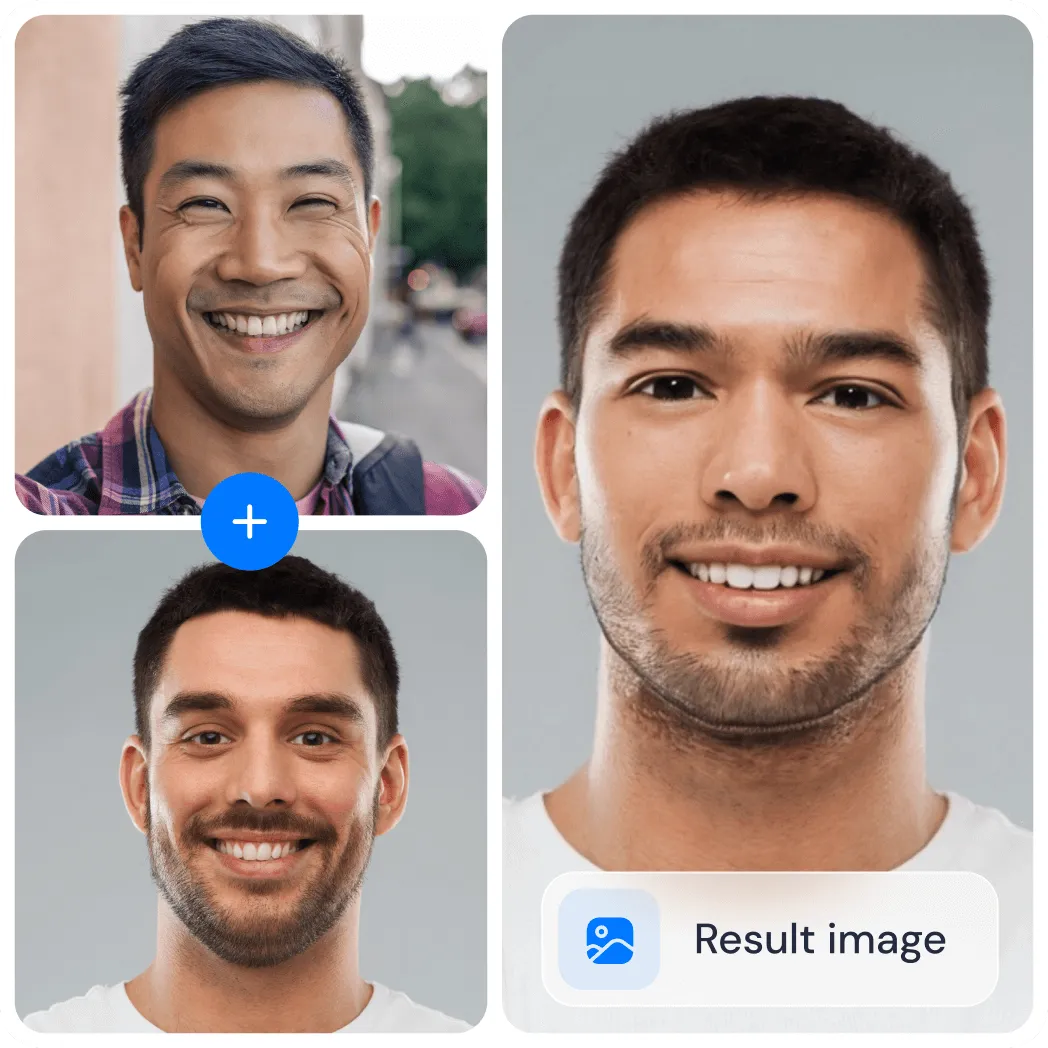

Replacing the face in a target image with a face from another source.

Digitally blending facial features from two people to create a new synthetic face.

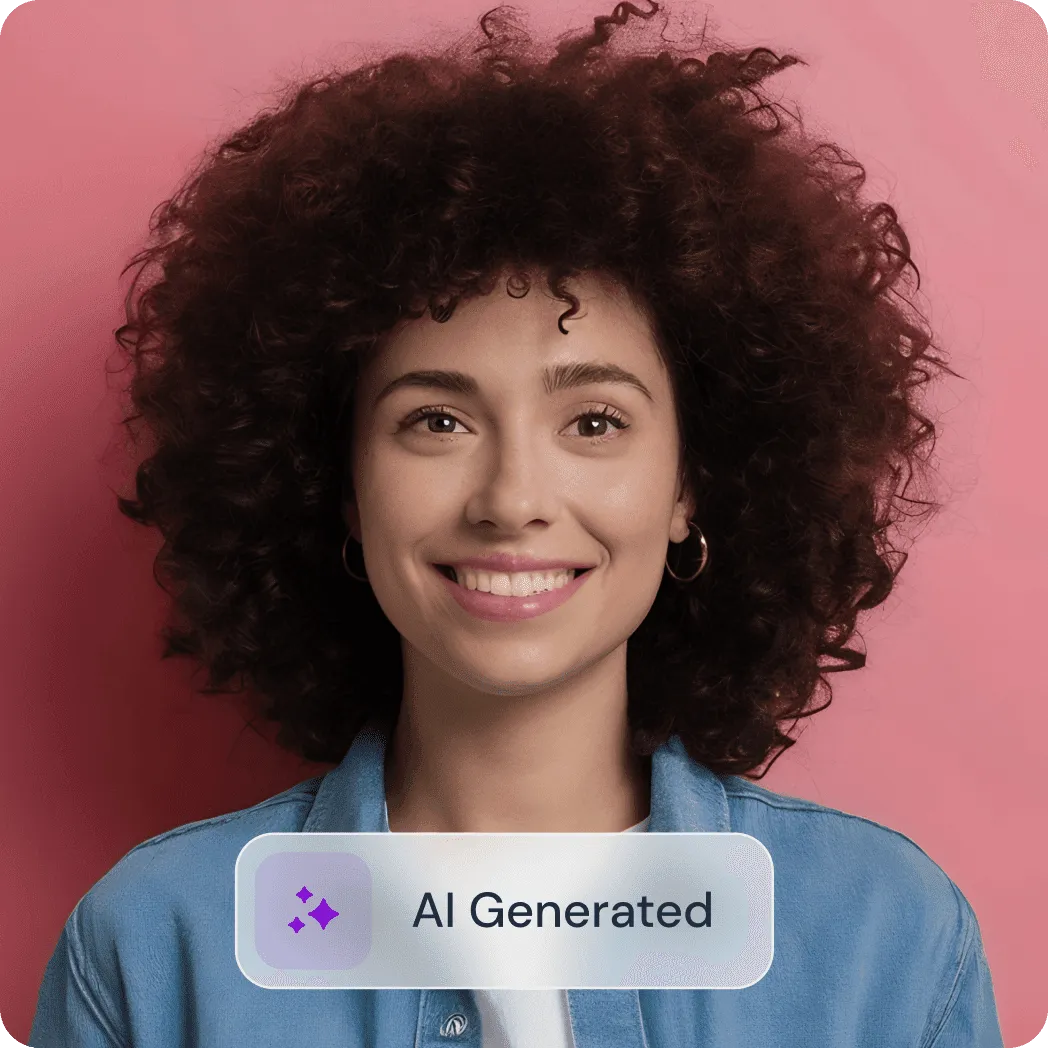

AI-generated images of people who don’t exist. All facial features are entirely synthetic, unlike face morphs, which blend real people’s facial features to generate a new face.

Digitally replicating real facial movements and expressions to make manipulated videos appear authentic.

Physical presentation attacks use counterfeit visual data, such as high-quality screens, printed images, or masks, to deceive camera-based verification systems.

Flat, printed masks are used to mimic someone's face and deceive verification systems, but lack the depth and detail of a real human face.

Lifelike, three-dimensional masks made of materials like silicone are crafted to closely resemble a real person, making them harder to detect.

Printed photos of a face are presented to the camera, attempting to pass off a static image as a live individual.

Printed images mounted on cardboard provide a sturdier, more rigid appearance in an effort to fool systems.

Footage is replayed on high-resolution screens in front of the camera to simulate a live individual, making it difficult to distinguish replayed footage from a live person.

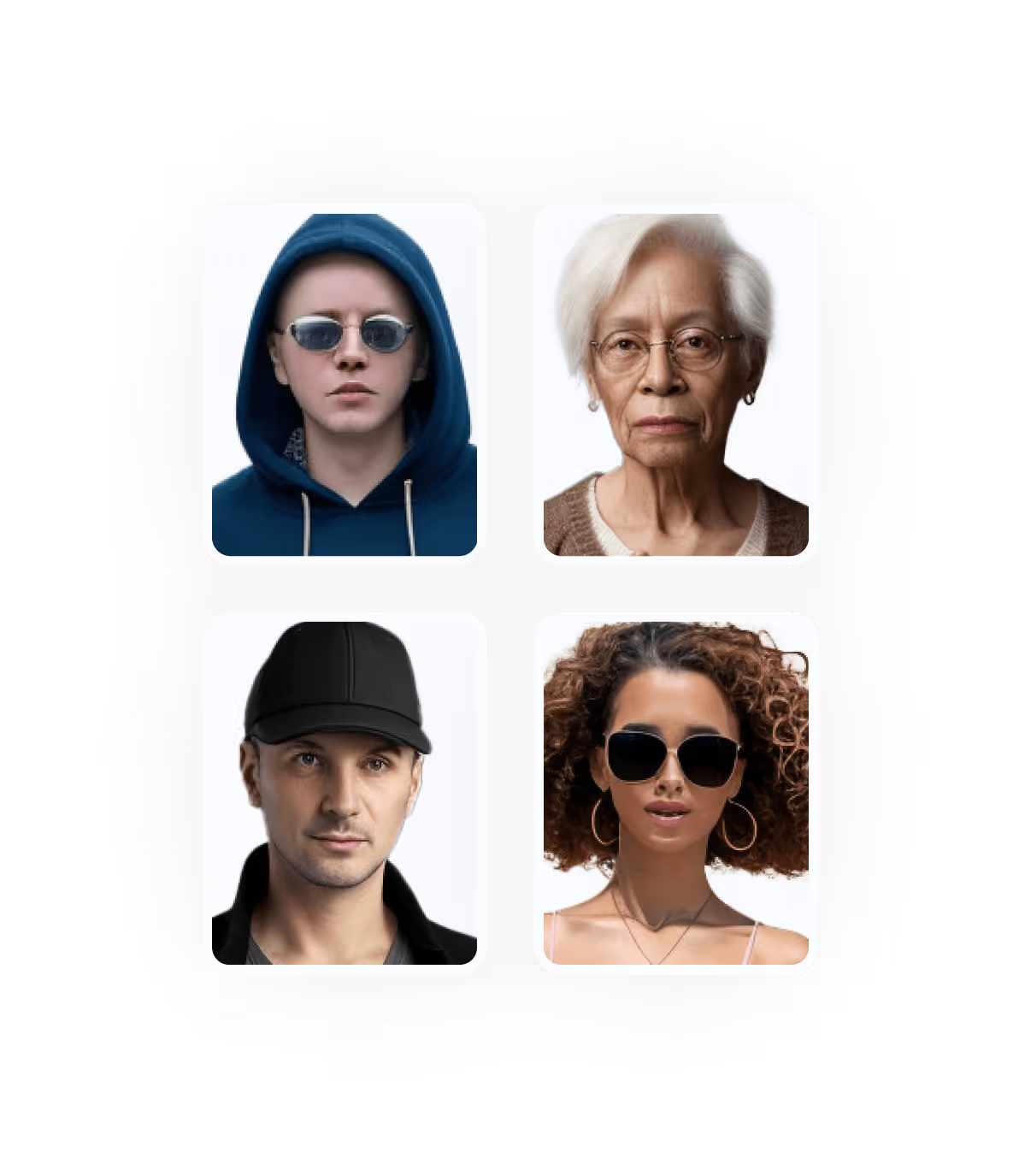

Facial modifications intended to deceive recognition systems. Evasion or obfuscation attacks occur when individuals alter their appearance to make it harder for facial recognition systems to verify their biometric features.

Overly dramatic facial movements, like extreme smiles or wide eyes, are used to distort the face and confuse recognition systems.

Items like hats, glasses, or scarves are used to block parts of the face, preventing systems from capturing a clear image.

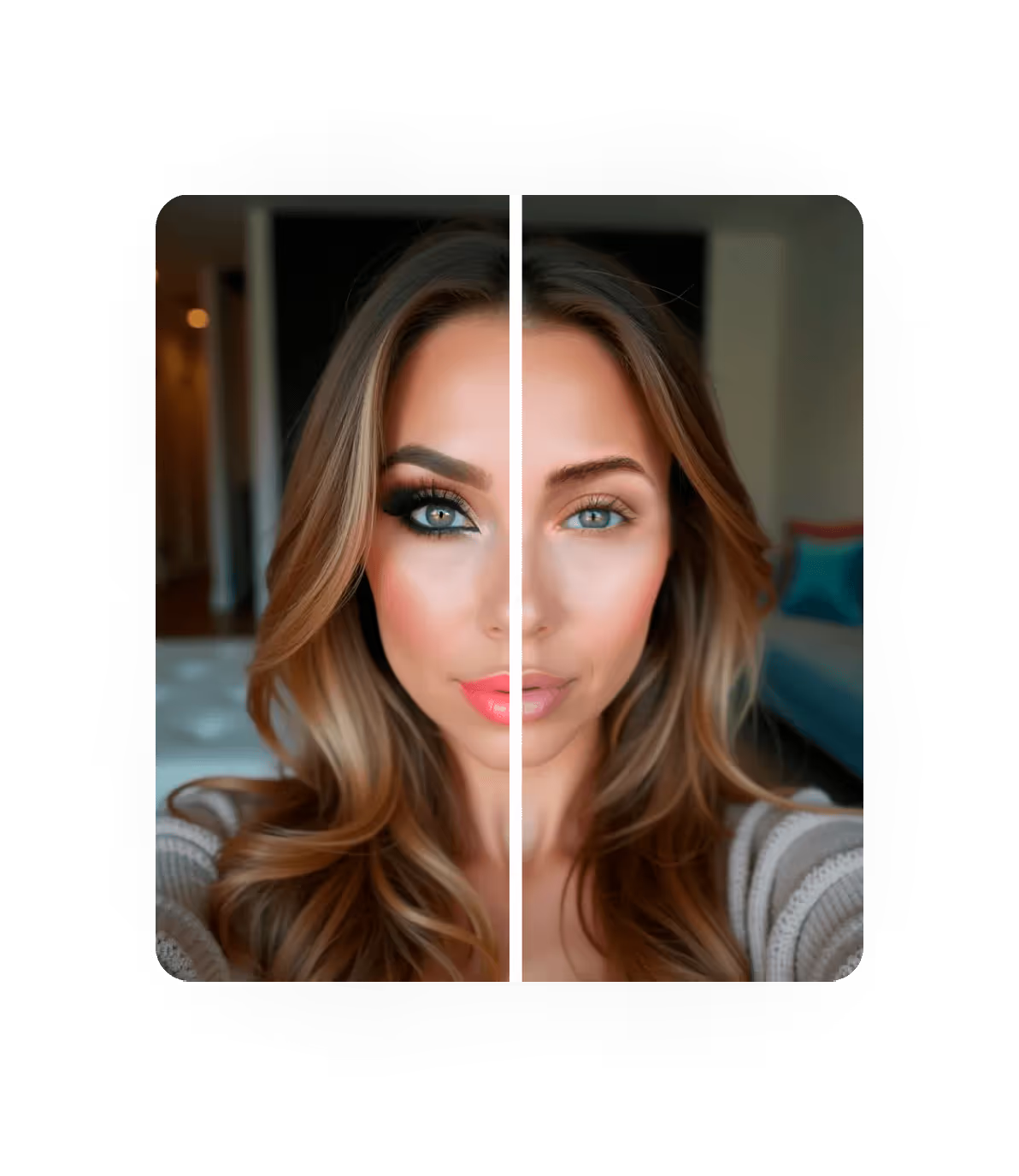

Complex makeup techniques are applied to change the appearance of key facial features, making it harder for systems to recognize the individual.

Our advanced liveness detection within Incode Deepsight distinguishes real people from manipulated or synthetic footage, with no friction or user interaction needed.

Discover how Incode Deepsight’s advanced liveness technology tackles fraud without impacting your user experience.

Unlike other providers who rely on third-party vendors, we build and own our entire technology stack.

Our AI/ML models, powered by deep learning and designed for identity verification, allow us to train on the latest document and biometric fraud vectors. This full control enables us to tailor our models to the unique needs of our clients, ensuring superior performance and flexibility.

Using advanced neural networks, including standard Convolutional Neural Networks (CNNs) as well as cutting-edge Large Vision Models (LVMs) and transformers, we train our models to achieve state-of-the-art results across various tasks.

Over nearly a decade, we’ve curated large, statistically representative datasets, so our models deliver balanced performance across variables like age, skin tone, and gender. Our in-house Fraud Lab has compiled over 1 million unique presentation attacks, from basic printouts to advanced 3D masks. We also generate synthetic data such as face swaps and synthetic faces, enhancing the robustness of our models.

Our internal testing environment is designed to be more challenging than real-world spoof attempts.

By testing our models against attacks more complex than those seen in the field, we prepare them to maintain high detection rates in production. This way, we can stop known fraud rings, repeat verification attempts, and other fraudulent behaviors before they impact your business.

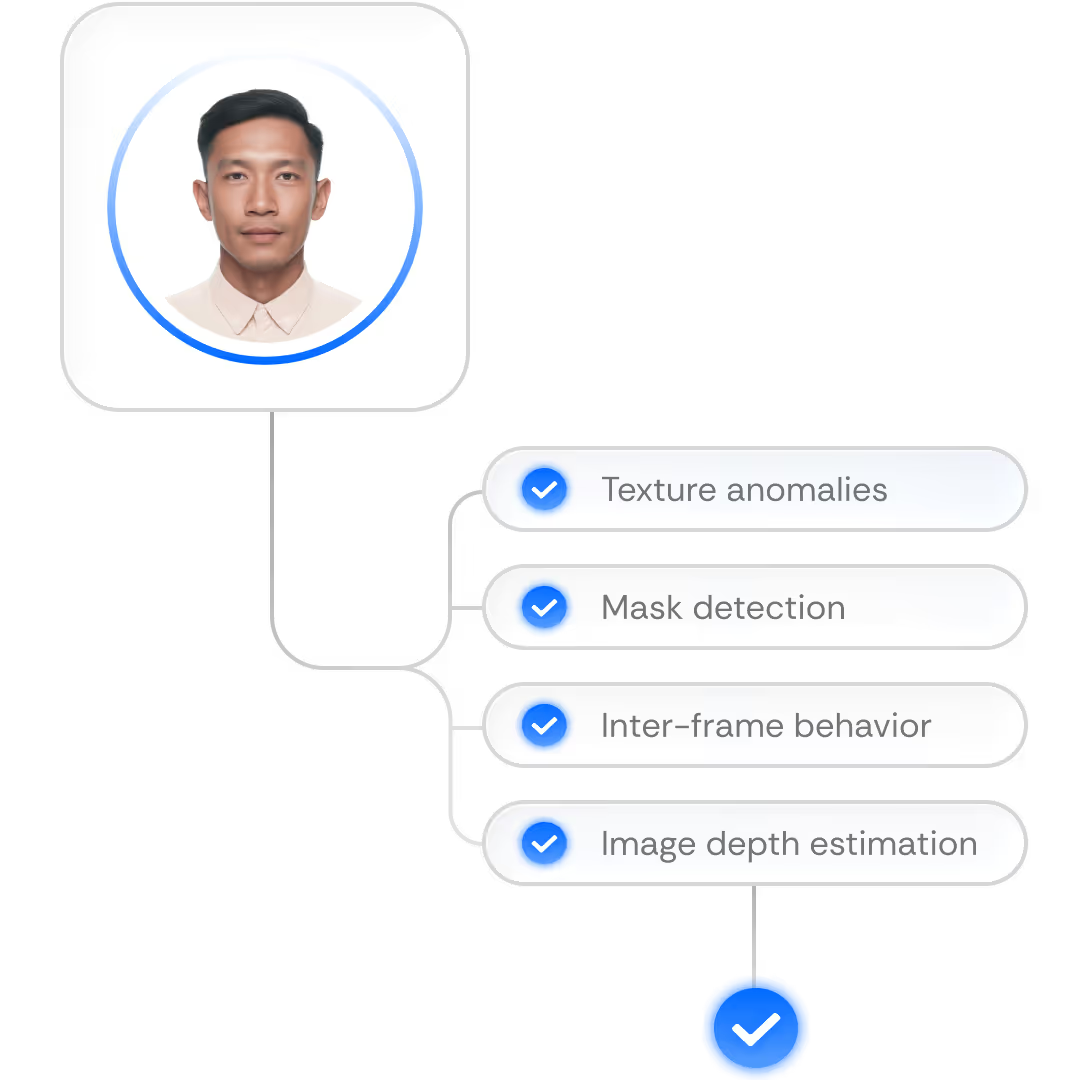

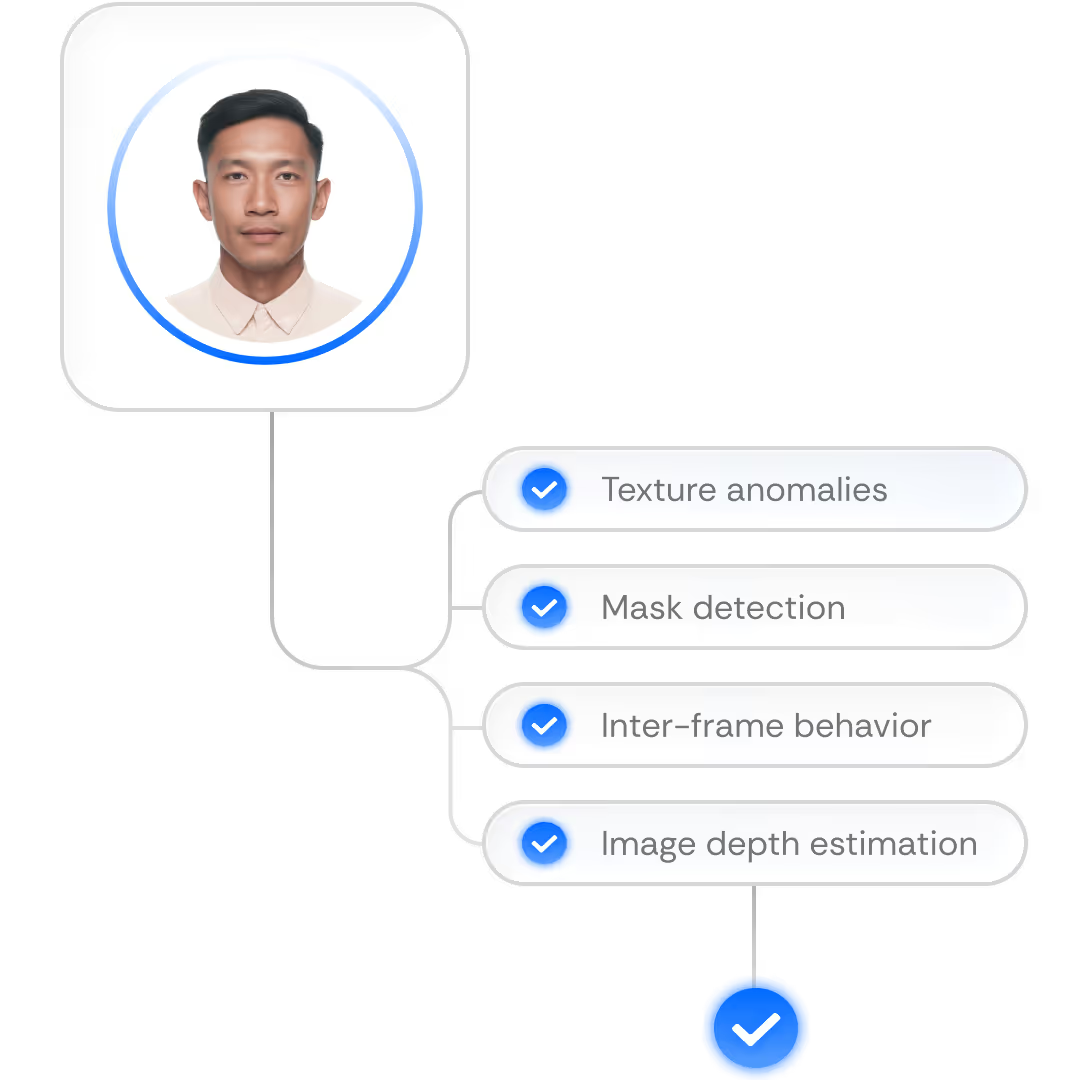

Incode’s liveness detection uses multi-modal intelligence to maximize accuracy without slowing down the end-user experience.

Other models on the market today rely on a single input, typically a 2D image. As a result, they struggle to detect complex spoofing attempts, which hurts their user experience, security, and identification accuracy.

Incode Deepsight enhances facial capture accuracy by selecting optimal frames and incorporating multiple modalities such as video, depth, and motion sensors. This approach mimics real-life interactions, reducing both false negatives and false positives.
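As a rough illustration of the idea, the sketch below picks the best-quality frame from a capture and combines per-modality liveness scores into one value. It is not Incode Deepsight’s implementation: the `Frame` fields, the quality heuristic, the modality names, and the weights are all hypothetical, chosen only to show how frame selection and multi-modal fusion can fit together.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Frame:
    sharpness: float           # hypothetical image-quality score in [0, 1]
    face_coverage: float       # hypothetical fraction of the face visible, in [0, 1]
    scores: Dict[str, float]   # hypothetical per-modality liveness scores in [0, 1]


def frame_quality(frame: Frame) -> float:
    """Toy quality heuristic: a frame must be both sharp and show the full face."""
    return frame.sharpness * frame.face_coverage


def select_best_frame(frames: List[Frame]) -> Frame:
    """Return the frame with the highest quality score."""
    if not frames:
        raise ValueError("at least one frame is required")
    return max(frames, key=frame_quality)


def fuse_scores(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted average over the modalities that are actually present."""
    used = {name: w for name, w in weights.items() if name in scores}
    total = sum(used.values())
    if total == 0:
        raise ValueError("no weighted modality is available")
    return sum(scores[name] * w for name, w in used.items()) / total


# Illustrative values only; these are not measured data.
frames = [
    Frame(sharpness=0.4, face_coverage=0.9,
          scores={"video": 0.6, "depth": 0.5, "motion": 0.4}),
    Frame(sharpness=0.9, face_coverage=0.95,
          scores={"video": 0.9, "depth": 0.8, "motion": 0.7}),
]
weights = {"video": 0.5, "depth": 0.3, "motion": 0.2}  # assumed weights

best = select_best_frame(frames)
liveness_score = fuse_scores(best.scores, weights)
```

Renormalizing the weights over the available modalities means a device without a depth sensor still yields a usable score, which is one simple way to support mixed hardware.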

Our liveness detection AI models are continuously refined by Incode’s Fraud Lab, where our team trains our proprietary AI technology in response to the latest emerging fraud techniques.

Unlike competitors who rely on third-party providers, we can immediately train our in-house models to analyze input for emerging fraud types, ensuring these attacks are neutralized before they impact your business.

In 2024, the GA DDS independently tested Incode’s liveness detection according to iBeta Level 2 protocols. The test recorded 0 false positives (false acceptances of fraudulent users) and 0 false negatives (false rejections of genuine users).

In 2023, Incode’s liveness technology was evaluated on Michigan State University’s Mobile Face Spoofing Database (MSU-MFSD), a public dataset designed to test systems against spoofing attacks. The evaluation confirmed our system’s precision, with a 0% false positive rate and a 0% false negative rate.
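For readers who want to see how these two rates are computed, here is a minimal sketch using the definitions above: a false positive is an attack that was accepted, and a false negative is a genuine user who was rejected. The `error_rates` helper and the sample outcomes are hypothetical and are not taken from either evaluation.

```python
from typing import Iterable, Tuple


def error_rates(results: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Compute (false_positive_rate, false_negative_rate).

    Each result is a pair (is_genuine, accepted):
      - false positive: an attack (is_genuine=False) that was accepted
      - false negative: a genuine user (is_genuine=True) who was rejected
    """
    attacks = genuine = false_accepts = false_rejects = 0
    for is_genuine, accepted in results:
        if is_genuine:
            genuine += 1
            if not accepted:
                false_rejects += 1
        else:
            attacks += 1
            if accepted:
                false_accepts += 1
    if attacks == 0 or genuine == 0:
        raise ValueError("need at least one attack and one genuine attempt")
    return false_accepts / attacks, false_rejects / genuine


# Made-up outcomes for illustration: 4 genuine attempts and 5 attack attempts.
sample = (
    [(True, True)] * 3 + [(True, False)]       # one genuine user rejected
    + [(False, False)] * 4 + [(False, True)]   # one attack accepted
)
fpr, fnr = error_rates(sample)

# A run with no errors yields 0% on both rates.
clean = [(True, True)] * 4 + [(False, False)] * 5
clean_fpr, clean_fnr = error_rates(clean)
```

Each rate is divided by its own population (attacks or genuine attempts), so a result of 0% on both means no attack was accepted and no genuine user was turned away in that test set.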

Verified reviews, certifications, and customer stories show the impact of Incode’s technology.
